WNN: File Format for Neural Network Interchange

Keywords:

Artificial neural networks, File format, Neural network model sharing, Neural network model saving

Abstract

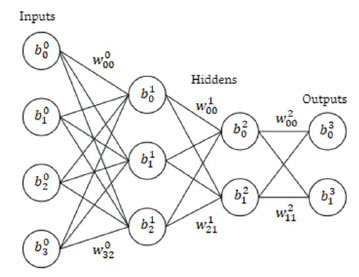

A standardized file format for saving trained neural network models, independent of any programming language or neural network library, would make it simple to share or publish such models online. The file would record metadata about the network, such as the number of inputs, the number of hidden layers, the number of nodes per hidden layer, the number of outputs, and the activation function used, along with the weight and bias values. A file in this format could be parsed to reconstruct the model in any programming language or library, removing the model's dependency on the library in which it was created.
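The abstract lists the fields such a file would carry but not their on-disk layout. The following Python is a minimal sketch of how that could work. It assumes a JSON encoding and uses hypothetical field names (`inputs`, `nodes_per_hidden_layer`, `activation`, and so on) and hypothetical helpers (`save_wnn`, `load_wnn`, `forward`) that are not taken from the WNN specification. A writer stores the metadata together with the weights and biases. A reader in a different program then parses the file, checks that the shapes agree with the metadata, and rebuilds a working forward pass without the library that trained the model.

```python
import json
import math

# Hypothetical activation names; the actual WNN specification may use different ones.
ACTIVATIONS = {
    "sigmoid": lambda x: 1.0 / (1.0 + math.exp(-x)),
    "tanh": math.tanh,
    "relu": lambda x: max(0.0, x),
}


def save_wnn(path, n_inputs, hidden_sizes, n_outputs, activation, weights, biases):
    """Write the metadata and parameters named in the abstract to a JSON file.

    weights[k] has one row per node in layer k+1 and one column per node in layer k;
    biases[k] has one value per node in layer k+1.
    """
    model = {
        "inputs": n_inputs,
        "hidden_layers": len(hidden_sizes),
        "nodes_per_hidden_layer": list(hidden_sizes),
        "outputs": n_outputs,
        "activation": activation,
        "weights": weights,
        "biases": biases,
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(model, f, indent=2)


def load_wnn(path):
    """Parse a file written by save_wnn and check that shapes agree with the metadata."""
    with open(path, encoding="utf-8") as f:
        model = json.load(f)
    hidden = model["nodes_per_hidden_layer"]
    if len(hidden) != model["hidden_layers"]:
        raise ValueError("hidden layer count does not match node list")
    sizes = [model["inputs"], *hidden, model["outputs"]]
    if len(model["weights"]) != len(sizes) - 1 or len(model["biases"]) != len(sizes) - 1:
        raise ValueError("expected one weight matrix and bias vector per layer transition")
    for k, (w, b) in enumerate(zip(model["weights"], model["biases"])):
        if len(w) != sizes[k + 1] or len(b) != sizes[k + 1]:
            raise ValueError(f"layer {k + 1}: wrong number of nodes")
        if any(len(row) != sizes[k] for row in w):
            raise ValueError(f"layer {k + 1}: wrong number of incoming weights")
    if model["activation"] not in ACTIVATIONS:
        raise ValueError(f"unknown activation {model['activation']!r}")
    return model


def forward(model, x):
    """Rebuild the network from the parsed file and propagate one input vector."""
    act = ACTIVATIONS[model["activation"]]
    # Assumption: the single stored activation is applied at every layer, output included.
    for w, b in zip(model["weights"], model["biases"]):
        x = [act(sum(wi * xi for wi, xi in zip(row, x)) + bi) for row, bi in zip(w, b)]
    return x
```

For example, `save_wnn("model.wnn", 2, [3], 1, "sigmoid", weights, biases)` on one machine, followed by `forward(load_wnn("model.wnn"), [0.0, 1.0])` on another, needs only a JSON parser and basic arithmetic. Storing the layer sizes explicitly, rather than inferring them from the weight arrays, lets a reader reject a truncated or inconsistent file before it runs the network.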
